I couldn’t figure out why I got two of everything I purchased from Amazon – two copies of a book, two pieces of lavalier microphone, RAM modules for my laptop and cables for my digital audio interface. I went back to my purchase history and realized that I accidentally ordered two of everything. I only wanted two of the RAM modules and cables, not the microphone and the book. No wonder I paid more than what I expected.

Depending on your recovery objectives and service level agreements, designing a SQL Server high availability and disaster recovery (HA/DR) solution will require you to have at least two of everything – two servers for local high availability (HA) plus another one for disaster recovery (DR), two or more copies of your databases, two data centers. You get the idea.

Which is why I started the previous statement with “depending on..”

The Confusion Around Azure Availability Sets

Leveraging Microsoft Azure for DR requires understanding what it offers so you don’t end up accidentally “clicking the button twice.”

Something that is frequently brought up during HA/DR conversations involving Azure is the concept of an availability set. No, this is not SQL Server Availability Group. Availability Sets allow you to logically group one or more (emphasis mine) Azure virtual machines (VM). The Azure platform takes care of where the VMs are placed within the underlying hardware infrastructure. In order to understand this concept better, let’s introduce additional concepts by illustrating a typical server rack inside a data center.

Fault Domains

Modern data centers are filled with racks containing physical servers, just like the image above. Each physical server can run one or more VMs, depending on the available resources. Most system administrators nowadays don’t even know how a rack-mounted server look like because they are all accessed remotely. I still remember the time when we had to move servers across data centers using trolleys. Think Compaq ProLiant servers.

A rack contains a power distribution unit (PDU) that is used to distribute electrical power to all of the mounted physical servers. All of the physical servers are connected to the PDUs which, in turn, are attached to an uninterrupted power supply (UPS) to protect against power interruption. This configuration is to provide availability to the physical servers. A rack also contains a network switch to connect all of the physical servers to the network.

But the reality is, the PDU or the network switch is a single point of failure. If the PDU fails for whatever reason – blown fuse, burnt wiring due to overloading, wear and tear, etc. – all of the physical servers in the rack will be powered off together with all of the VMs running on the servers. Similarly, all of the physical servers get disconnected from the network when the network switch fails.

Given this standard layout of how physical servers are mounted on racks in data centers, the concept of a fault domain is introduced.

According to Microsoft’s documentation, a fault domain defines the group of machines that share a common PDU and network switch. Hence, a fault domain is simply the server rack.

Update Domains

Each physical server on the rack will need to be updated and maintained on a regular basis – OS updates, firmware updates, security patches, hardware replacements, etc. You’re still responsible for the VMs but Microsoft is responsible for the underlying physical server that runs your VM. Because the physical servers need to be maintained, we introduce the concept of an update domain. An update domain is a logical boundary that defines how Microsoft performs planned maintenance. It would not make sense to maintain and/or reboot several servers at the same time (I know this first hand when – almost 12 years ago – two domain controllers in our data center were rebooted at the exact same time). Hence, an update domain is simply the physical server and how they are maintained.

Each fault domain (rack) will have several update domains (physical servers). When you deploy one or more VMs in an Availability Set, Azure will place them in different fault (rack) and update (physical servers) domains. This provides high availability by making sure that the VMs do not end up in the same rack and same physical servers, thus, meeting the 99.95% SLA.

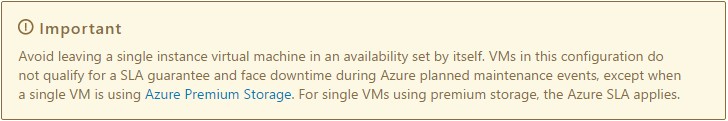

True…until you read the fine print.

It does tell you that you need to have at least more than one VM in an Availability Set to meet the 99.95% SLA. It’s the reason I kept emphasizing one or more.

About that second serving…

You might think that you need more than one VM on Azure to meet that 99.95% SLA. But as I mentioned in the previous blog post, if you don’t need 99.95% SLA for your DR requirements, you might want to hold off creating that Availability Set. And maybe ask your boss if he can take you out for lunch. You just saved the company a few hundred (or even thousand) dollars a month.

Please note: I reserve the right to delete comments that are offensive or off-topic.